La Fondation Cuomo s'engage dans la lutte contre le cancer du sein et pelvien en Afrique

12 Avr 2024

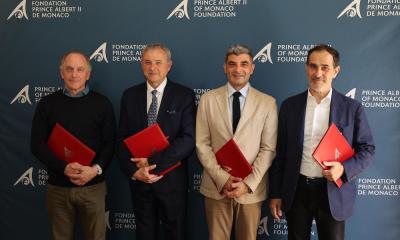

La Fondation Cuomo s’associe à l'AME-International, au Centre Hospitalier Princesse Grace (CHPG) et au Gouvernement Princier, représenté par la Coopération monégasque, dans un partenariat inédit visant à améliorer la détection et le traitement du cancer du sein et des cancers pelviens en ...

Water for Peace: World Water Day 2024

22 Mar 2024

Today is World Water Day. This year, the event focuses on the theme "Water for Peace".

Scientist supported by the Fondation Cuomo selected for two new research support programmes

02 Feb 2024

Carbon offset opportunities for cattle ranching in the dry forests of South America…

Marcella Crudeli's travelling cultural salons

19 Jan 2024

For more than 14 years, Maestro Marcella Crudeli has hosted a monthly cultural gathering that combines music with a talk by a guest of honour, who shares expertise in their chosen field with a loyal audience.

The Fondation Cuomo supports Monaco Disease Power in its new project for adults with ASD

18 Jan 2024

The Fondation Cuomo has just signed a partnership with the association Monaco Disease Power (MDP), formalising the creation of a care facility for adults with ASD (autism spectrum disorder).

The Fondation Cuomo comes to the aid of victims of Cyclone Michaung in India

04 Jan 2024

The Fondation Cuomo has granted 11,000 euros to the Diocese of Chingleput as a flood relief fund.

Press release from the Grand Chancellery of the Légion d'honneur, 13/12/2023

14 Dec 2023

At a meeting at the Grand Chancellery, Maria-Elena Cuomo, President of the Foundation, and General François Lecointre, Grand Chancellor of the Légion d'honneur, welcomed this new partnership, which follows an initial sponsorship in 2022…

A magnificent concert in the heart of Rome for the 32nd edition of the "ROMA" International Piano Competition

27 Nov 2023

The 32nd edition of the "Roma" International Piano Competition, organised by the "Fryderyk Chopin" Cultural Association and supported by the Fondation Cuomo, took place in the resplendent setting of the Oratorio di San Francesco del Caravita, a Baroque building erected in 1631. Accompanied by member...

Dr Pedro Fernandez named "One Young World" Ambassador

09 Nov 2023

"A big thank you to the Fondation Cuomo for supporting me at the start of my development journey!"

Two young pianists crowned at the 2023 edition of the "Roma" International Piano Competition

06 Nov 2023

The young German prodigy of Congolese origin, Joel Madaliński-Artur, was named best "Young Pianist", while the Italian Massimo Urban won the "Emerging Pianist" prize this morning…

The Fondation Cuomo and the F. Chopin Cultural Association open the 32nd edition of the "Roma" International Piano Competition

26 Oct 2023

On 16 October, the Protomoteca hall of Rome's city hall hosted the press conference announcing the 32nd "Roma" International Piano Competition, organised by Maestro Marcella Crudeli.

The Fondation Cuomo supports the modernisation of the Légion d'honneur's schools (maisons d'éducation)

28 Sep 2023

For the new 2023 school year, the Fondation Cuomo has decided to provide financial support for one of the initiatives led by the Grand Chancellery of the Légion d'honneur at the Saint-Denis lycée…

From Argentina to Italy: Fondation Cuomo beneficiaries recognised as models of eco-citizenship

14 Sep 2023

The Italian city of L'Aquila, capital of the Abruzzo region, has just received the "Cycling Municipality" label, a national distinction awarded by the Italian Federation for the Environment and Cycling.

Alessandro D'Aiutolo: winner of the "Fondation Cuomo Special Prize" at the Premio Roma Danza competition

04 Aug 2023

Alessandro D'Aiutolo, from Salerno, Italy, won the Fondation Cuomo Special Prize at the latest "Premio Roma Danza".

The Premio Roma Danza 2023 international competition

17 Jul 2023

The 21st edition of the Premio Roma Danza, an international competition dedicated to performance and video dance, concluded with the gala evening on 12 July.

Scientist supported by the Fondation Cuomo takes part in the "Re.Generation" programme

13 Jul 2023

Dr Pedro Fernandez is taking part in a two-week residency organised by the Prince Albert II of Monaco Foundation.

Launch of the "Re.Generation" programme, of which the Fondation Cuomo is a partner

30 Jun 2023

A summer campus designed to train future leaders by raising their awareness of the major challenges facing the world today.

"Feet on the ground and head in the stars!"

30 Jun 2023

Handover of equipment for the Himalayan expedition of the Nice-based association "Exploits sans frontières"

2022 Activity Report

23 Jun 2023

…As President of the Fondation Cuomo, I have a responsibility to offer a platform that can promote and facilitate meaningful commitments to communities and individuals, in the hope of creating positive change in the world…

The 11th season of the Tournoi Sainte-Dévote

24 Apr 2023

The 2023 edition of the Challenge Sainte Dévote took place on 22 April at the Stade Louis II in Monaco.

Building the future: the green school experience in South India

21 Mar 2023

This year we celebrate the fourth anniversary of our pilot school, built in the rural area of Mambakkam, Tamil Nadu, South India.

The IPCC releases its 6th report on climate change

20 Mar 2023

A focus on the work of Siméon Diedhiou, a recipient of the Fondation Cuomo scholarship programme.

"We live on a planet where we have everything we need, but are we destroying it?"

09 Mar 2023

Interview with Mahmoud Bab Ly, Senegalese visual artist and art teacher

The Centre Cardio-Pédiatrique Cuomo in Dakar celebrates its 1,000th child operated on

06 Mar 2023

On 28 February, the Centre Cardio-Pédiatrique Cuomo in Dakar (CCPC) celebrated the 1,000th child to undergo open-heart surgery.

The Fondation Cuomo contributes €15,000 to the organisation of the Tournoi Sainte Dévote 2023

31 Jan 2023

Since 2013, our cumulative financial support for the Fédération Monégasque de Rugby has totalled €90,040.

The Fondation Cuomo, guest of honour at the prize-giving for the winners of the 31st edition of the "Roma" International Piano Competition 2022

24 Nov 2022

On 16 November, the final of the 31st "Roma" International Piano Competition, organised by Maestro Crudeli, took place in Rome.

A new work by Aziz N'Diaye enters the Presidential Palace of Senegal

15 Nov 2022

The Senegalese visual artist Aziz N'Diaye, a long-standing friend of the Fondation Cuomo, has just completed a work of art commissioned from the Manufacture nationale des tapisseries (MSAD) in Thiès by the Presidency of the Republic of Senegal.

The Prince's Government and the Fondation Cuomo commit to supporting vulnerable children in Burkina Faso

14 Nov 2022

The Department of International Cooperation of the Prince's Government and the Fondation Cuomo have signed a memorandum of understanding to support children and young people living on the streets…

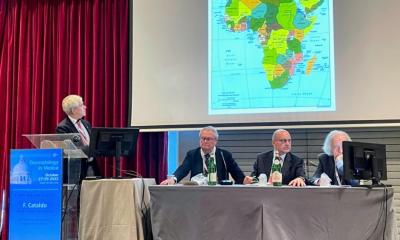

Dr Daniel Cataldo invited to an international medical conference in Venice

07 Nov 2022

At the international medical conference "Dermatology in Venice", held from 27 to 29 October at the NH Laguna Palace hotel, Dr Daniel Cataldo, a specialist in facial reconstructive surgery, gave a presentation. Titled "Cancrum oris, my experien...

Senegalese student, supported by the Fondation Cuomo since 2012, admitted to the University of Nantes

25 Oct 2022

In 2012, Awa Gueye, then a 16-year-old Senegalese schoolgirl suffering from mitral insufficiency, benefited from a humanitarian mission organised in Dakar by the Fondation Cuomo and La Chaîne de l'Espoir.

The Fondation Cuomo helps publish and distribute a medical book

04 Oct 2022

The Fondation Cuomo provided financial support to Dr Daniel Cataldo for the publication and distribution of his book "Facial Reconstructive Surgery".

The Fondation Cuomo supports "Entreparents", an information network for new and expectant parents

08 Sep 2022

"Entreparents" aims to be a new model of support and information for parents in the Principality of Monaco and the surrounding area, before and after the birth of their baby.

An in-person return from 8 to 12 July for the 20th edition of the "Premio Roma Danza", with the support of the Fondation Cuomo

26 Jul 2022

After two editions held online and remotely, the Prize has finally returned to the stage of the Teatro Grande.

"The President's Statement", an excerpt from the Fondation Cuomo's 2022 Activity Report

22 Jul 2022

In normal times, the Foundation and all our partners, who have contributed to or been supported by our actions and initiatives over all these years, would have celebrated this anniversary with joy…

"Informed citizens build a better world": Brazilian scientist's work acclaimed by the local press

11 Jul 2022

"EducAir: alerting communities to the risks of smoke from fires"

Chinese researcher supported by the Fondation Cuomo sets course for the "Third Pole"

27 Jun 2022

Dr Dongfeng Li, a Chinese researcher and doctoral student from the 2019-2021 cohort of the IPCC Scholarship Programme, is once again receiving our support.

The Fondation Cuomo supports "Babel", a participatory opera produced by the Opéra Nice Côte d'Azur

21 Jun 2022

The Fondation Cuomo is supporting "Babel", a so-called "participatory" opera in which the protagonists are young schoolchildren from Nice, recruited specially for the occasion.

The Fondation Cuomo supports a new research project on deforestation in the Argentine Gran Chaco

02 Jun 2022

Postdoctoral research proposed by former IPCC scholarship holder Dr Pedro Fernandez and conducted at the Biogeography Laboratory of Humboldt University, Berlin.

The 2022 edition of the "Itinéraires artistiques de Saint-Louis": a visual arts exhibition supported by the Fondation Cuomo

31 May 2022

The 8th edition of the "Itinéraires artistiques de Saint-Louis" runs until 10 June in Saint-Louis, a port city in northern Senegal.

Despite the health crisis, the renewable energy project led by the University of Las Tunas (Cuba) and supported by the Fondation Cuomo is moving forward…

14 Mar 2022

Setting up a renewable energy training centre

Rome: the "Premio Roma Danza" competition celebrates its 20th anniversary this year

10 Mar 2022

Since 2019, the Fondation Cuomo has supported this cultural event as its main sponsor.

Dr Dongfeng Li's scientific work cited in the recent IPCC report

04 Mar 2022

Dongfeng Li obtained his doctorate in September 2021, defending a thesis entitled "The response of water and sediment fluxes to climate change in the rivers of the Asian high plateaus". This research project, supported by the Fondation Cuomo between 2019 and 2021, has benefit...

Real-time air quality monitoring to safeguard the well-being of communities in Amazonas

25 Feb 2022

A research project supported by the Fondation Cuomo…

The Fondation Cuomo invited to a round table on health at the Dubai World Expo

03 Feb 2022

A day devoted to health and well-being…

The doyenne of the Royal Court of Tiébélé (Burkina Faso) named a "Living Human Treasure"

21 Dec 2021

A Burkinabè heritage site of great importance, supported by the Fondation Cuomo

Third Monegasque Conference on Autism and Mental Disability

07 Dec 2021

The Fondation Cuomo has been a partner of the association Monaco Disease Power since 2012

The Fondation Cuomo rewards the winners of the "Chopin Prize" at the 30th edition of the "Roma" International Piano Competition 2021

17 Nov 2021

The 30th prize-giving ceremony of the "Roma" International Piano Competition took place on 16 November 2021 at the Palladium theatre of Roma Tre University.

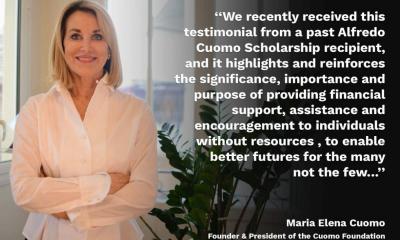

"The Fondation Cuomo helped me pursue a career and have an identity of my own…"

08 Nov 2021

Thank you for all the help you have given me, and thousands and thousands of students like me…

Student researchers of the 2021/23 cohort of the IPCC Scholarship Programme gather in Monaco

02 Nov 2021

The student researchers of the new cohort of the IPCC (Intergovernmental Panel on Climate Change) Scholarship Programme travelled to Monaco on 28 October to receive their diplomas. The ceremony took place on 29 October 2021 at the Grimaldi Forum, Monaco, in...

The prestigious international journal SCIENCE publishes a study by a Chinese researcher supported by the Fondation Cuomo

02 Nov 2021

On 29 October 2021, the renowned journal SCIENCE published the latest article by Dr Dongfeng Li and his research group, entitled "Exceptional increase in river sediment fluxes in the increasingly warm and wet Asian highlands". Founded in 1880 by Thomas Edis...

The Fryderyk Chopin Association, in partnership with the Fondation Cuomo, opens the 30th edition of the "Roma" International Piano Competition

29 Oct 2021

On 28 October, a press conference was held to mark the opening of the 30th "Roma" International Piano Competition.

The success of scholarship programmes in the Fondation Cuomo's schools in South India (Tamil Nadu): students' testimonies…

22 Oct 2021

Since 2004, the Fondation Cuomo has offered scholarships to the most promising students from the schools it funds in Tamil Nadu, South India. Thanks to this project, more than 500 young adults have so far entered working life with confidence. Almost all of them have fou...

A documentary film by researcher Pedro Fernandez on the ecosystems of the Gran Chaco, Argentina

20 Oct 2021

Studies by Pedro Fernandez show that silvopastoralism could at last bring together the regional economy and the ecosystem of the Gran Chaco…

Maestro Marcella Crudeli's musical salons

08 Oct 2021

Saturday 2 October was a wonderful day filled with music and enthusiasm in the magnificent cloister of the Confraternity of San Giovanni Battista dei Genovesi, in the Trastevere district of Rome.

Burkina Faso: the Fondation Cuomo and the Association Zeine work together for the financial independence of internally displaced people

14 Sep 2021

A partner of the Fondation Cuomo since 2010, the Association Zeine (Gorom-Gorom, Burkina Faso) has launched a new project to help internally displaced people in northern Burkina Faso.

Schools and universities reopen in Tamil Nadu, South India

06 Sep 2021

In line with Tamil Nadu government guidelines, schools and higher education institutions reopened on 1 September 2021 under strict health rules. The classes concerned range from the 9th to the 12th "standards" (students aged 15 to 18...

Young Brazilian researcher supported by the Fondation Cuomo extends his doctoral study into an initiative to protect public health

02 Sep 2021

While the impact of Amazonian deforestation and forest fires on biodiversity, the climate and the economy remains widely covered in the media, their impact on public health receives far less attention. Starting from this observation, Igor Oliveira Ribeiro, a Brazilian researcher supported since 20...

Alfredo Cuomo Higher Sec. School in Sendivakkam becomes a "Fit India" certified school

16 Jul 2021

The Alfredo Cuomo Higher Sec. School in Sendivakkam (Tamil Nadu, South India), a secondary school built in 2005 and supported since then by the Fondation Cuomo, has been awarded the "Fit India" label.

A "hybrid" format for the 19th edition of the Premio Roma Danza competition

15 Jul 2021

The 19th edition of the "Premio Roma Danza" dance competition was held from 8 to 12 July 2021 at the Accademia Nazionale di Danza in Rome.

Maestro Marcella Crudeli receives the Order of Merit of the Italian Republic

17 Jun 2021

Maestro Marcella Crudeli, the celebrated international pianist, receives the medal of "Grand Officer of the Order of Merit of the Italian Republic" in recognition of her brilliant career.
